Scientists at UCLA and the Technion, Israel’s Institute of Technology, have uncovered how our brain cells encode the pronunciation of individual vowels in speech.

The discovery could lead to new technology that verbalizes the thoughts of people unable to speak because of paralysis or disability.

Published in the latest edition of the journal Nature Communications, the study showed that different parts of the brain are activated when different vowels are pronounced.

The study was conducted by Prof. Shy Shoham and Dr. Ariel Tankus of the biomedical engineering faculty at Haifa’s Technion and Prof. Itzhak Fried of Tel Aviv Sourasky Medical Center and the neurosurgery department at the University of California at Los Angeles.

“We know that brain cells fire in a predictable way before we move our bodies,” said Dr. Itzhak Fried, a professor of neurosurgery at the David Geffen School of Medicine at UCLA. “We hypothesized that neurons would also react differently when we pronounce specific sounds. If so, we may one day be able to decode these unique patterns of activity in the brain and translate them into speech.”

Mapping Brain Activity

Fried and the Technion’s Dr. Ariel Tankus followed 11 American patients at UCLA who suffered from epilepsy that could not be controlled with medication. Electrodes had been implanted in their brains to pinpoint the damaged region responsible for their seizures.

The researchers recorded neuronal activity as the patients uttered one of five vowels, or syllables containing those vowels. They then used mathematical algorithms to analyze how the recorded brain regions responded to each vowel.

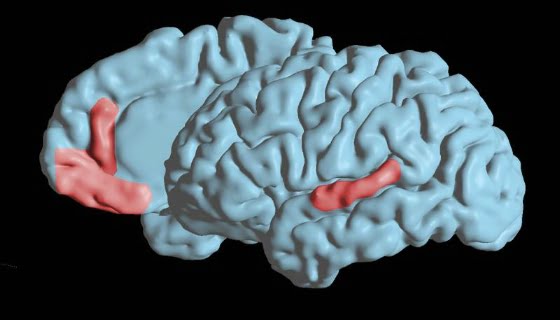

With Prof. Shy Shoham from the Technion, the team studied how neurons encoded vowel articulation at both the single-cell and the collective level. The scientists found two areas, the superior temporal gyrus and a region in the medial frontal lobe, that housed speech-related neurons attuned to vowels. The two sites, however, encoded vowels very differently.

Neurons in the superior temporal gyrus responded to all vowels, although at different firing rates. In contrast, neurons in the medial frontal region fired for only one or two vowels. “We found neurons that react to specific vowels,” Shoham said in an interview with the Jerusalem Post.

In the superior temporal gyrus, the neurons’ encoding of vowels reflected the anatomy that makes speech possible, specifically the position of the tongue inside the mouth.

Re-enabling Speech

“Once we understand the neuronal code underlying speech, we can work backwards from brain-cell activity to decipher speech,” said Fried. “This suggests an exciting possibility for people who are physically unable to speak. In the future, we may be able to construct neuro-prosthetic devices or brain-machine interfaces that decode a person’s neuronal firing patterns and enable the person to communicate.”

In other words, if the whole range of vowels and consonants can be recognized as a structured code, patients might be able to communicate merely by trying to speak, even without the ability to produce sounds.

The researchers now hope to develop a better interface that connects the brain to any computer, making it possible to “translate” brain signals into artificial speech.

“For the speech interface, we would need electrodes only to record and not to stimulate, unlike an electrode that permanently stimulates a part of the brain for Parkinson’s disease. Thus our device would require less power and could be wireless,” Shoham explained.

The study was supported by grants from the European Research Council, the U.S. National Institute of Neurological Disorders and Stroke, the Dana Foundation, and the Lady David and L. and L. Richmond research funds.

Photo by UCLA/Fried Lab
