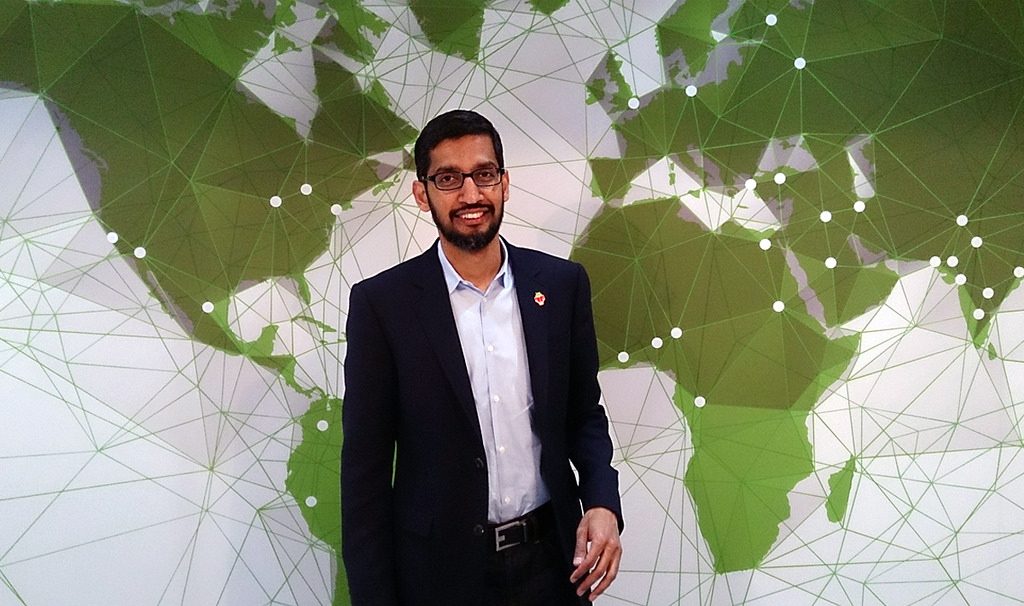

On stage this month at Google’s annual I/O developer conference in Mountain View, California, the tech giant’s CEO Sundar Pichai aired a recorded phone call of a person making a haircut appointment.

Person 1: Hello, how can I help you?

Person 2: Hi, I’m looking to book a women’s haircut for a client. Um, I’m looking for something on May 3rd

Person 1: Sure, give me one second

Person 2: Mm…hmm

Person 1: Sure, what time are you looking for around?

Person 2: At 12:00 pm

Person 1: We do not have a 12:00 pm available. The closest we have to that is a 1:15 pm

Person 2: Do you have anything between, uh, 10:00 am and 12:00 pm?

Person 1: Depending on what service she would like. What service is she looking for?

Person 2: Just a women’s haircut for now

Person 1: Ok, we have a 10 o’clock

Person 2: 10:00 am is fine

Person 1: Okay, what’s her first name?

Person 2: The first name is Lisa

Person 1: Okay, perfect. So I will see Lisa at 10:00 am on May 3rd

Person 2: Okay, great, thanks

Person 1: Okay, have a great day, bye

https://www.facebook.com/circuitbreaker/videos/2045943969031755/

The conversation between two female-sounding voices went on for 56 seconds and was peppered with the pauses and sounds of a normal conversation, including “ums” and “uhs” and the often agreeable “mm hmm.” But the person making the appointment was not a person at all. The exchange was a computer-human interaction that sounded like a real-life conversation, and the computer on the other end was the newest phase of the Google Assistant, Google’s rival to Apple’s Siri and Amazon’s Alexa.

Google, Pichai said, has been working on this technology for many years. It was developed mainly by engineers in Tel Aviv. Dubbed Google Duplex, it “brings together all our investments over the years [including in] natural language understanding, deep learning, [and] text-to-speech,” Pichai said.

Made in Israel

Google Duplex was unveiled this month, and the key people behind the technology are Yaniv Leviathan, principal engineer, and Yossi Matias, vice president of engineering and the managing director of Google’s R&D Center in Tel Aviv. According to Wired magazine, the experimentation with the technology started several years ago as a 20-percent project – an initiative that encouraged employees to spend about 20 percent of their time on their own ideas, and which was reportedly scrapped in 2013.

In a post dated May 8, 2018 on the Google AI blog, Matias and Leviathan explained the technology in depth.

Duplex, they wrote, was “a new technology for conducting natural conversations to carry out ‘real world’ tasks over the phone. The technology is directed towards completing specific tasks, such as scheduling certain types of appointments. For such tasks, the system makes the conversational experience as natural as possible, allowing people to speak normally, like they would to another person, without having to adapt to a machine.”

For now, this means that Duplex can carry out very specific tasks, like making appointments, fully autonomously, but only “after being deeply trained in such domains,” they write. “It cannot carry out general conversations.”

To demonstrate this, a second recorded conversation for an attempted dinner reservation between a male-sounding voice (the Google Assistant) and a female-sounding respondent with a notable accent was aired at the same Google I/O conference. The exchange “went a bit differently than expected,” said Pichai, when the respondent tried to communicate that the caller did not need a reservation. Still, the Assistant appears to understand the concept and concludes with “oh, I gotcha.”

“We’re still developing this technology, and we’re actually working hard to get this right,” Pichai said after the second demo. “We have many of these examples when the calls don’t quite go as expected. But the Assistant knows the context and nuance. It knew to ask for wait times in this case, and it handled the interaction gracefully.”

But it’s highly likely that Google didn’t invest years and millions just to have the Assistant book tables and set appointment times, and that it has its eye on bigger targets.

Leviathan and Matias say they needed to consider several challenges for Duplex to work.

Natural language, they explain, can be hard to understand and tricky to model. “Latency expectations require fast processing, and generating natural sounding speech, with the appropriate intonations, is difficult,” they write.

When people talk to each other in a natural setting, they use sentence structures that are complex, speak faster than when addressing a computer, sometimes correcting themselves mid-sentence and relying on context when they omit words. They can also express a wide range of intent, for which Leviathan and Matias give the following example: “‘So umm Tuesday through Thursday we are open 11 to 2, and then reopen 4 to 9, and then Friday, Saturday, Sunday we… or Friday, Saturday we’re open 11 to 9 and then Sunday we’re open 1 to 9.'”

While Duplex is able to carry out some sophisticated interactions, largely with full autonomy, Leviathan and Matias say the system also has “a self-monitoring capability, which allows it to recognize the tasks it cannot complete autonomously (e.g., scheduling an unusually complex appointment),” and “in these cases, it signals to a human operator, who can complete the task.”
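
The hand-off described above can be pictured as a simple routing rule. The following is a minimal sketch, not Google's implementation: the source does not say how Duplex decides to escalate, so the `confidence` score, the 0.8 threshold, and the names `CallState` and `route_call` are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass

# Hypothetical cutoff; the source gives no details on the escalation criterion.
ESCALATION_THRESHOLD = 0.8


@dataclass
class CallState:
    task: str          # e.g. "haircut appointment"
    confidence: float  # assumed self-assessed score in the range [0, 1]


def route_call(state: CallState) -> str:
    """Return who should finish the task: the system or a human operator."""
    if state.confidence >= ESCALATION_THRESHOLD:
        return "autonomous"
    # Below the cutoff, the system signals a human operator to take over.
    return "human_operator"
```

In this sketch, a routine booking with a high score stays with the system, while an unusually complex appointment with a low score is passed to a person.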

How it works

At the core of the technology, Matias and Leviathan say, “is a recurrent neural network (RNN) designed to cope with these challenges, built using TensorFlow Extended (TFX),” an end-to-end machine learning platform built on Google’s own TensorFlow tech. TensorFlow is “an open-source software library for dataflow programming.”

Duplex was essentially trained on anonymized phone conversation data – voicemails and Google Voice conversations – “as well as features from the audio, the history of the conversation, the parameters of the conversation (e.g. the desired service for an appointment, or the current time of day) and more.”
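
To make the kinds of input listed above concrete, here is a small illustrative sketch of how one conversational turn might be bundled before being passed to a model. It is an assumption-laden example, not Duplex's actual data format: the `TurnInput` class, its field names, and the `build_model_input` helper are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TurnInput:
    transcript: str                                       # text of the latest utterance
    audio_features: List[float]                           # assumed numeric audio features
    history: List[str] = field(default_factory=list)      # earlier utterances in the call
    parameters: Dict[str, str] = field(default_factory=dict)  # e.g. desired service


def build_model_input(turn: TurnInput) -> Dict[str, object]:
    """Flatten one turn into a single dictionary of named inputs."""
    model_input: Dict[str, object] = {
        "text": turn.transcript,
        "audio": list(turn.audio_features),
        "context": " | ".join(turn.history),
    }
    # Conversation parameters are added under a "param:" prefix.
    for name, value in sorted(turn.parameters.items()):
        model_input[f"param:{name}"] = value
    return model_input
```

For the haircut call, the parameters might hold the desired service and the requested date, while the history carries the salon's earlier replies.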

During the training, the system was supervised by operators who acted as instructors and could “affect the behavior of the system in real time as needed.” This went on “until the system performs at the desired quality level, at which point the supervision stops and the system can make calls autonomously,” they write.

Google is planning an experimental initial release to a “small” number of people later this year, according to CNET. Matias and Nick Fox, vice president of product and design for Google Assistant and Search, told the publication that Google wants to “proceed with caution” with the new technology.

In the limited rollout, that small group of people will be able to make dinner reservations and hair appointments, as well as check holiday hours, information that Duplex can then make available online, “reducing the number of such calls businesses receive, while at the same time making the information more accessible to everyone,” Matias and Leviathan explain.

Through Google Assistant – on mobile only at first – the calls will happen in the background, without user involvement, and will come from Google’s back-end system through an unspecified number.

Matias and Leviathan suggest a number of benefits to the technology, including the ability to enable “delegated communication with service providers in an asynchronous way, e.g., requesting reservations during off-hours, or with limited connectivity,” and helping address “accessibility and language barriers, e.g., allowing hearing-impaired users, or users who don’t speak the local language, to carry out tasks over the phone.”

Scott Huffman, VP of Engineering for the Google Assistant, wrote in a post this month that the technology will also help small businesses that don’t have online booking systems and rely on phone reservations.

“Many people simply don’t reserve with businesses that don’t take online bookings. With Google Duplex, businesses can operate as they always have; and people can easily book with them through the Google Assistant,” he wrote.

What about privacy and security?

Like nearly everything Google does, Duplex is raising privacy and security concerns. For the technology to be developed, Google had to tap into an incredible amount of personal data. Arguably, it had the permissions that users opt in to when setting up the Google Assistant, including web and app activity (search history and content), device information (contacts, calendars, and installed apps), and voice and audio activity, which records voice and audio input to improve speech recognition.

But there are also ethical issues surrounding two-party consent laws, where one party does not know they are being recorded, and additional privacy concerns, where the person on the other line does not know they are speaking to a robot. The latter outraged privacy advocates and tech watchers.

Zeynep Tufekci, a prominent Turkish techno-sociologist, wrote a Twitter thread blasting Google.

“Google Assistant making calls pretending to be human not only without disclosing that it’s a bot, but adding ‘ummm’ and ‘aaah’ to deceive the human on the other end with the room cheering it” was “horrifying,” she said.

“Silicon Valley is ethically lost, rudderless and has not learned a thing,” she charged.

With the tech world still reeling from massive security breaches, including Facebook’s Cambridge Analytica scandal, it seems Google was listening.

The tech giant has since said that it was “designing this feature with disclosure built-in, and [will] make sure the system is appropriately identified. What we showed at I/O was an early technology demo.”

But there are a number of unanswered questions about how the tech will work – including how to prevent spam calling and other potential exploits – and, as Android Central points out, “if/when Duplex becomes an actual consumer product for anyone to use, expect it to be criticized and challenged in courts.”

Google Assistant is currently available on more than 500 million devices, including phones, connected home devices, and cars. By the end of 2018, Google expects it to be available in more than 30 languages in 80 countries.
